Sim-to-Lab-to-Real: Safe Reinforcement Learning with Shielding and Generalization Guarantees

Kai-Chieh Hsu* Allen Z. Ren* Duy Phuong Nguyen Anirudha Majumdar** Jaime F. Fisac**

*equal contribution in alphabetical order **equal advising

Princeton University

Artificial Intelligence Journal (AIJ), October 2022

Progress and Challenges in Building Trustworthy Embodied AI Workshop, NeurIPS 2022

Oral, Generalizable Policy Learning in the Physical World Workshop, ICLR 2022


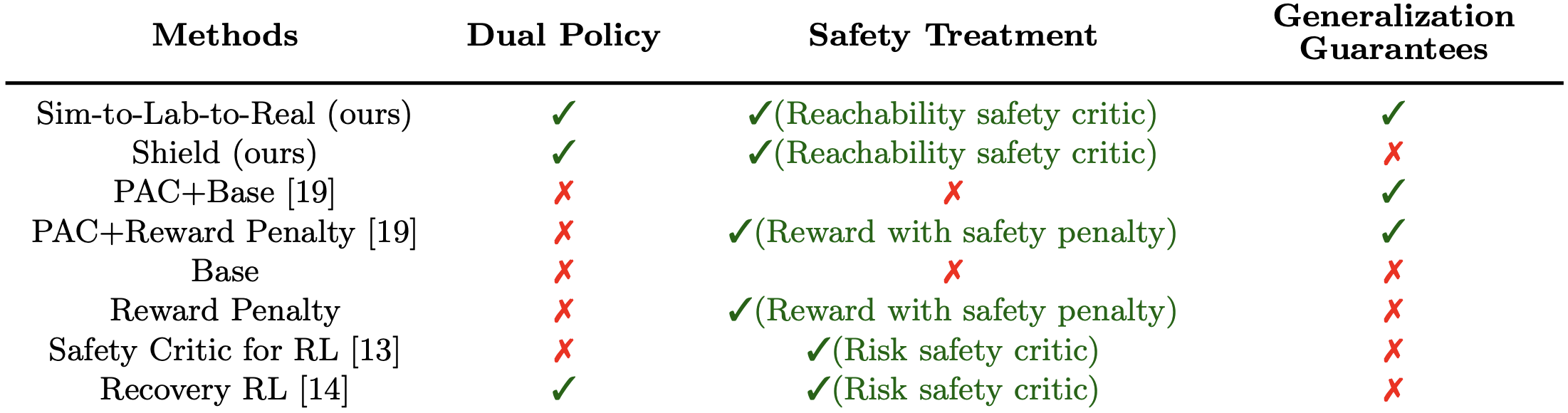

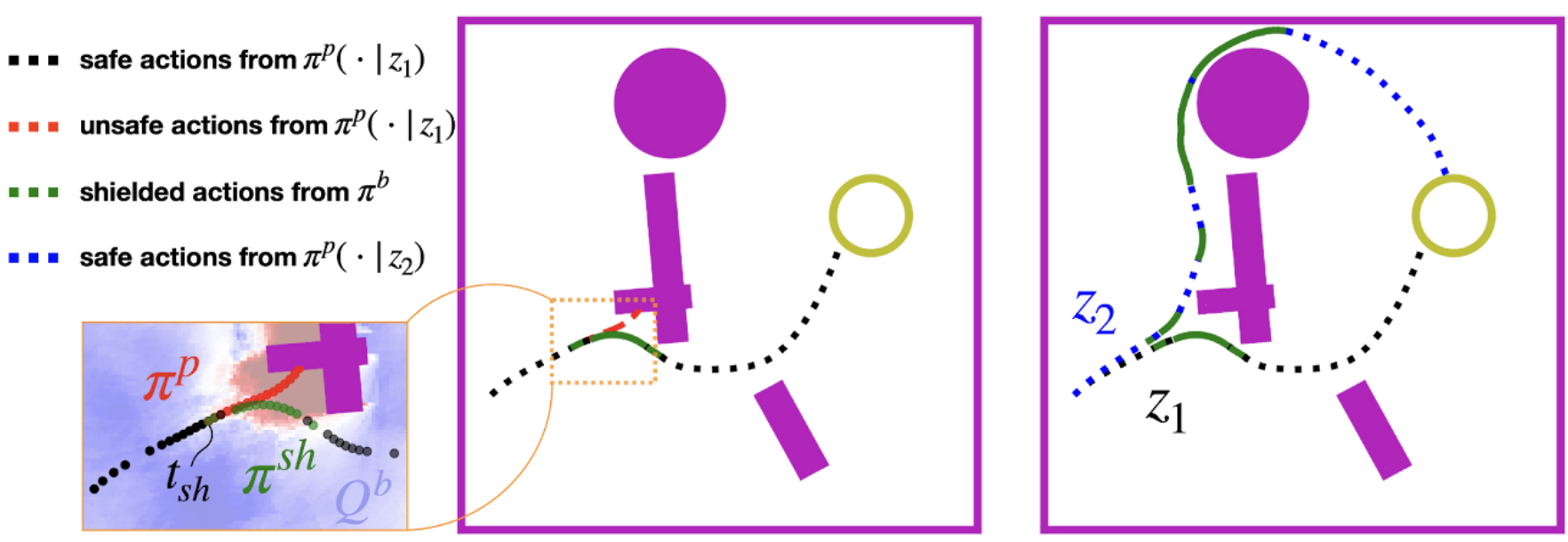

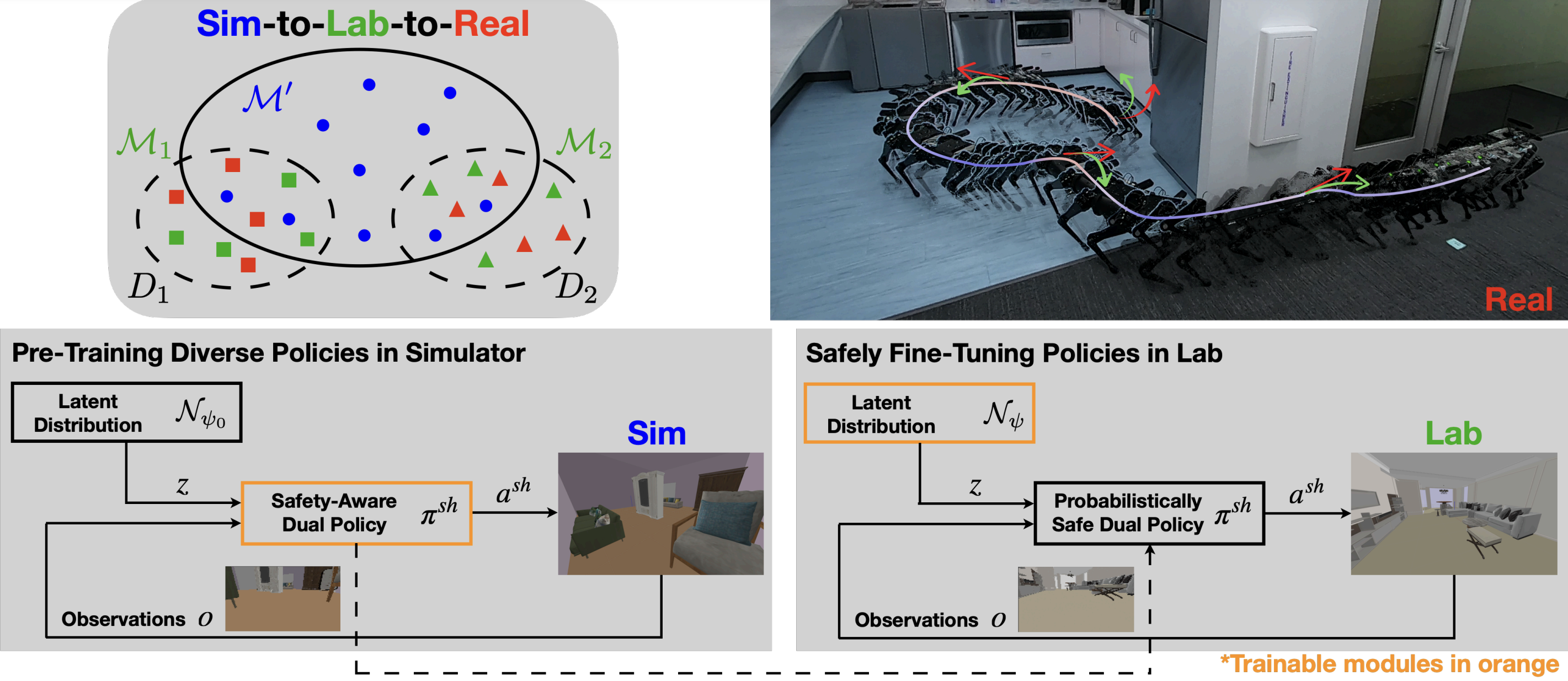

We propose Sim-to-Lab-to-Real, a framework that combines Hamilton-Jacobi (HJ) reachability analysis and the PAC-Bayes Control framework to improve the safety of robots during training and real-world deployment, and to provide generalization guarantees on the robots' performance and safety in real environments.
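
PAC-Bayes bounds relate the average cost of a distribution over policies on N training environments to its expected cost on unseen environments drawn from the same distribution. The sketch below computes one standard form, the PAC-Bayes-kl bound C(P) <= kl^-1( C_S(P) || (KL(P || P0) + ln(2 sqrt(N) / delta)) / N ), of the kind used in PAC-Bayes Control. It assumes a cost bounded in [0, 1] (for example, a 0/1 failure indicator). The function names and the example numbers are hypothetical and are not taken from the authors' code; the bound reported in the paper may include further terms, such as one accounting for estimating the empirical cost by sampling policies.

```python
import math


def kl_bernoulli(q: float, p: float) -> float:
    """KL divergence kl(q || p) between Bernoulli(q) and Bernoulli(p)."""
    eps = 1e-12  # clamp probabilities to avoid log(0)
    q = min(max(q, eps), 1.0 - eps)
    p = min(max(p, eps), 1.0 - eps)
    return q * math.log(q / p) + (1.0 - q) * math.log((1.0 - q) / (1.0 - p))


def kl_inverse(q: float, c: float, tol: float = 1e-9) -> float:
    """Largest p in [q, 1] with kl(q || p) <= c, found by bisection.

    kl(q || p) is increasing in p for p >= q, so bisection is valid.
    """
    lo, hi = q, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if kl_bernoulli(q, mid) <= c:
            lo = mid
        else:
            hi = mid
    return lo


def pac_bayes_kl_bound(emp_cost: float, kl_post_prior: float,
                       n_envs: int, delta: float) -> float:
    """Upper bound on the expected cost in new environments.

    emp_cost:      average cost of posterior P on the n_envs training
                   environments (costs assumed to lie in [0, 1])
    kl_post_prior: KL(P || P0) between posterior and prior over policies
    delta:         the bound holds with probability >= 1 - delta
    """
    reg = (kl_post_prior + math.log(2.0 * math.sqrt(n_envs) / delta)) / n_envs
    return kl_inverse(emp_cost, reg)


# Illustrative numbers only (not results from the paper).
bound = pac_bayes_kl_bound(emp_cost=0.1, kl_post_prior=5.0, n_envs=1000, delta=0.01)
print(f"guaranteed success rate >= {1.0 - bound:.3f}")
```

With a 0/1 failure cost, 1 minus the bound is, with probability at least 1 - delta over the draw of training environments, a lower bound on the expected success rate in new environments. Training on more environments (larger N) or keeping the posterior closer to the prior (smaller KL) tightens it.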

Method Overview

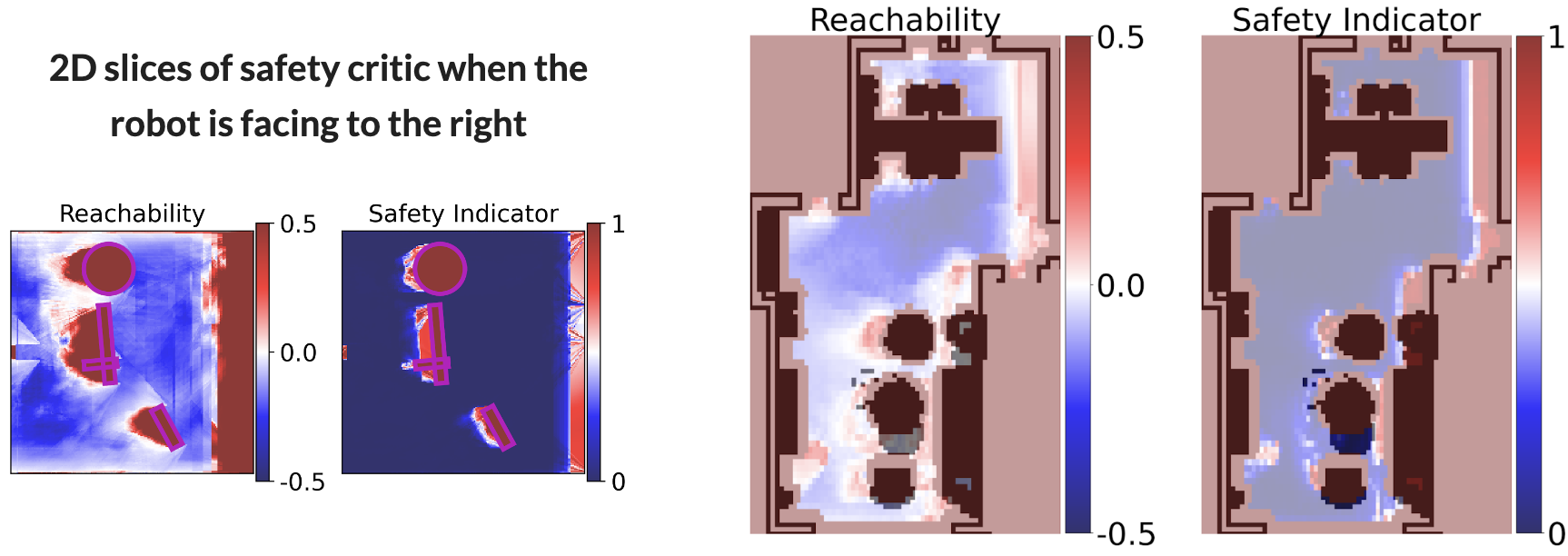

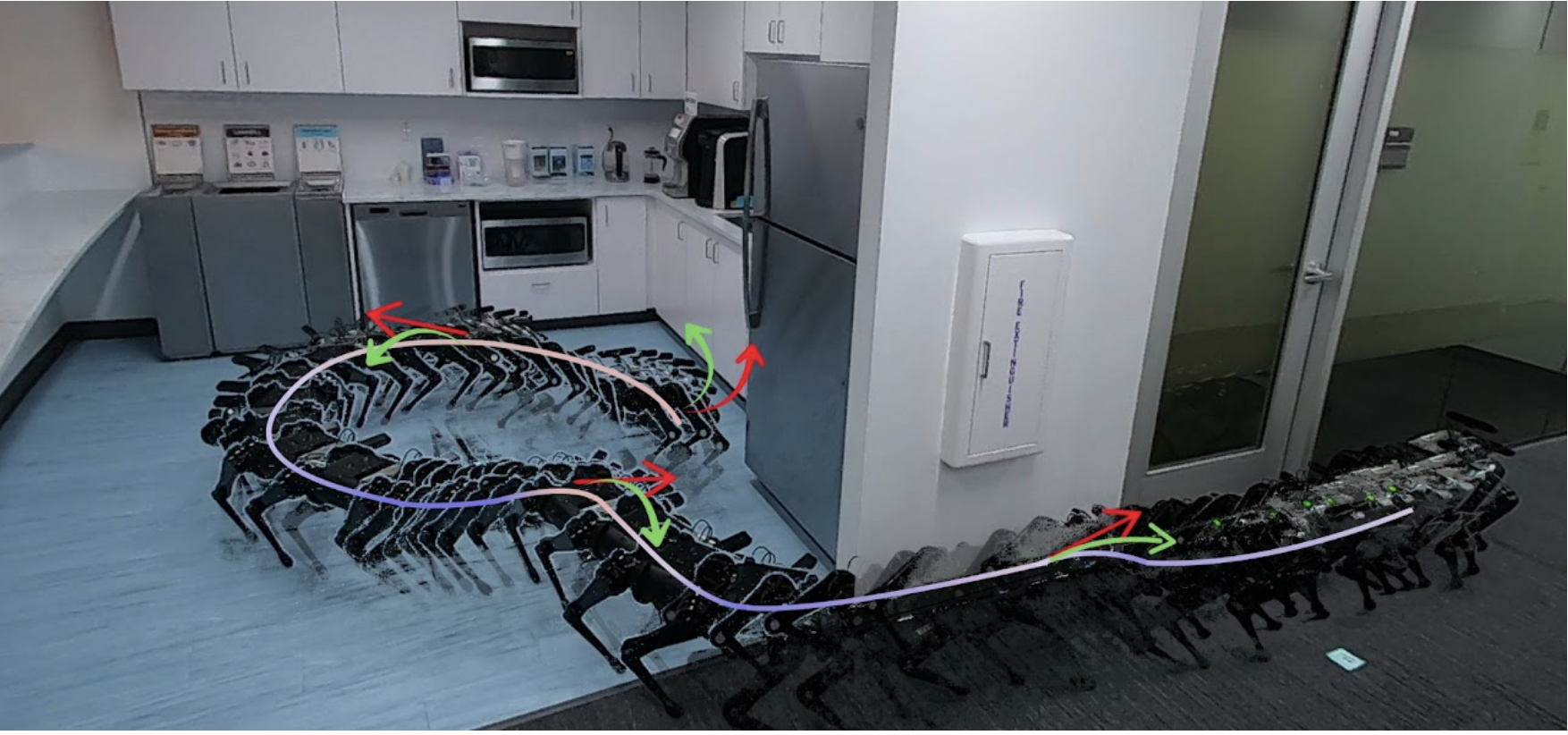

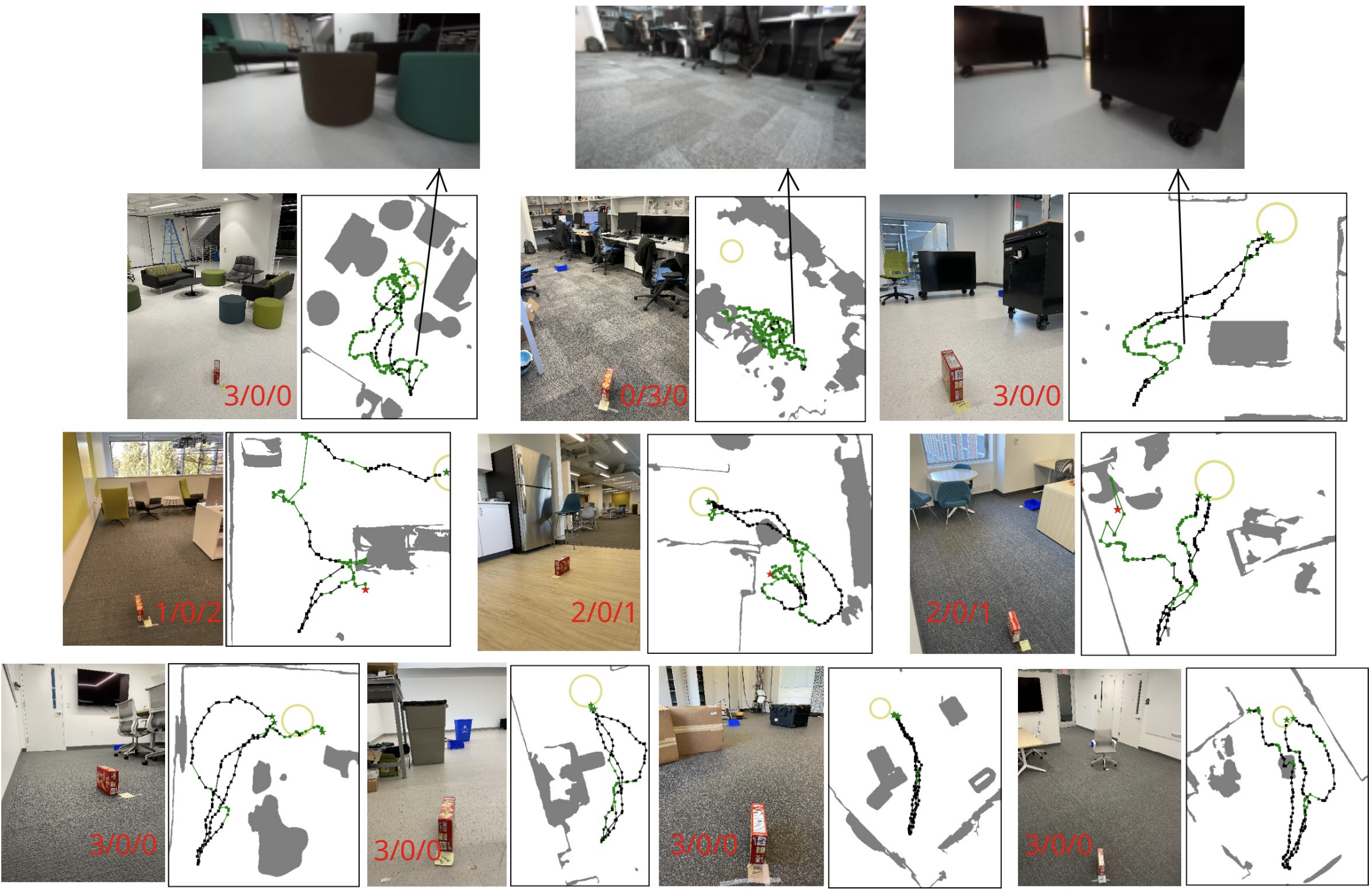

We introduce an intermediate training stage, Lab, between Sim and Real to safely bridge the Sim-to-Real gap in ego-vision indoor navigation tasks. Compared to Sim training, Lab training is (1) more realistic and (2) more safety-critical.
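
Because Lab training is safety-critical, the framework relies on shielding to keep the robot safe while it learns. One common form is a value-based shield: the task policy's action is executed only if a learned safety value for that action clears a threshold; otherwise a backup (safety) policy takes over. In reachability-based safe RL the safety critic is typically trained with a discounted safety Bellman equation; here a hand-written one-step margin for a hypothetical 1-D robot stands in for it. This is a generic sketch, and the exact shielding rule used in the paper may differ. `value_shield`, `toy_safety_q`, `toy_backup`, and all constants are illustrative assumptions.

```python
from typing import Callable, List, Tuple

State = List[float]
Action = List[float]


def value_shield(
    state: State,
    task_action: Action,
    safety_q: Callable[[State, Action], float],
    backup_policy: Callable[[State], Action],
    threshold: float = 0.0,
) -> Tuple[Action, bool]:
    """Keep the task action if its safety value clears the threshold,
    otherwise fall back to the backup (safety) policy.

    Assumed convention: larger safety value means safer; values below
    `threshold` mean the action is predicted to lead toward failure.
    Returns the executed action and whether the shield intervened.
    """
    if safety_q(state, task_action) >= threshold:
        return task_action, False
    return backup_policy(state), True


# Toy setup (hypothetical): 1-D robot moving toward a wall at x = 1.0.
WALL_X, RADIUS, DT = 1.0, 0.2, 0.1


def toy_safety_q(state: State, action: Action) -> float:
    # Stand-in for a learned safety critic: clearance after one step.
    next_x = state[0] + action[0] * DT
    return WALL_X - next_x - RADIUS


def toy_backup(state: State) -> Action:
    # Backup policy: back away from the wall.
    return [-0.5]


if __name__ == "__main__":
    print(value_shield([0.0], [0.5], toy_safety_q, toy_backup, threshold=0.1))
    print(value_shield([0.75], [1.0], toy_safety_q, toy_backup, threshold=0.1))
```

Far from the wall the task action passes through, printing `([0.5], False)`; near the wall the predicted clearance falls below the threshold and the shield substitutes the backup action, printing `([-0.5], True)`. A threshold above zero adds a buffer against errors in the learned critic, at the cost of more frequent interventions.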


Acknowledgements

Allen Z. Ren and Anirudha Majumdar were supported by the Toyota Research Institute (TRI), the NSF CAREER award [2044149], the Office of Naval Research [N00014-21-1-2803], and the School of Engineering and Applied Science at Princeton University through the generosity of William Addy ’82. This article solely reflects the opinions and conclusions of its authors and not ONR, NSF, TRI or any other Toyota entity. We would like to thank Zixu Zhang for his valuable advice on the setup of the physical experiments.